The term "deepfake" has moved from the fringes of the internet to the center of corporate security concerns. What was once a novel technology for generating amusing video clips has evolved into a sophisticated tool for fraud, disinformation, and social engineering. For IT security professionals, understanding the mechanisms behind deepfakes is no longer an academic exercise—it is a critical requirement for defending the enterprise. A manipulated video of a CEO announcing a fake merger or a fabricated audio clip of a CFO authorizing a fraudulent wire transfer can cause irreversible financial and reputational damage. This guide provides a structured overview of deepfake technology, from its creation to its detection, equipping you with the knowledge needed to fortify your organization's defenses against this advanced threat.

What Are Deepfakes?

Deepfakes are synthetic media in which a person's likeness is fabricated or swapped. Most commonly, a person in an existing image or video is replaced with someone else's likeness, though the term now also covers manipulated or fully synthetic audio. The term is a portmanteau of "deep learning" and "fake," reflecting the underlying technology used to create them. These highly realistic forgeries are generated by artificial intelligence, specifically through machine learning models that can analyze and reconstruct human faces, voices, and mannerisms with alarming accuracy. Unlike traditional video editing, which requires extensive manual effort, deepfake generation is a largely automated process capable of producing convincing results that can deceive both human observers and conventional security systems.

The core technology often involves a type of machine learning model known as a Generative Adversarial Network (GAN). A GAN consists of two competing neural networks: a "Generator" that creates the fake media and a "Discriminator" that attempts to distinguish it from authentic content. The two networks are trained against each other, with the Generator continuously improving its output to fool the Discriminator. This adversarial process results in increasingly realistic and difficult-to-detect synthetic media, posing a significant challenge for IT security professionals tasked with authenticating digital communications.
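The adversarial objective can be made concrete with the binary cross-entropy losses from the original GAN formulation. The sketch below is a minimal pure-Python illustration. It assumes the Discriminator outputs a probability that an input is real, and it uses the common "non-saturating" Generator loss. It computes the two losses for a single batch of scores rather than training real networks. In practice, a framework alternates gradient steps on these two quantities.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy the Discriminator minimizes.

    real_scores: D(x) on authentic samples; fake_scores: D(G(z)) on generated ones.
    Scores are probabilities in (0, 1) that the input is real.
    """
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Non-saturating Generator loss: small when the Discriminator rates fakes as real."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)

# Round where the Discriminator easily spots the fakes.
early = (discriminator_loss([0.9, 0.95], [0.1, 0.05]), generator_loss([0.1, 0.05]))
# Later round where the Generator's output fools the Discriminator.
later = (discriminator_loss([0.9, 0.95], [0.8, 0.9]), generator_loss([0.8, 0.9]))
print("early (D loss, G loss):", early)
print("later (D loss, G loss):", later)
```

As the Generator improves, its loss falls while the Discriminator's rises. That tug-of-war is the adversarial dynamic described above.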

How Deepfakes Are Created

The generation of deepfake content is a sophisticated process rooted in machine learning, specifically utilizing generative models like Generative Adversarial Networks (GANs) and autoencoders. At a high level, creating a deepfake involves training a model on large datasets to understand and then replicate human features, mannerisms, and vocal patterns with high fidelity. This process can be broken down into several key stages.

The Technical Workflow of Deepfake Generation

Data Collection and Extraction:

The initial step involves gathering extensive training data. For video deepfakes, this means collecting hundreds or thousands of images and video clips of two individuals: the "source" (whose identity will be imposed) and the "target" (who will be replaced). Automated tools extract frames and isolate faces, creating a robust dataset that captures various angles, lighting conditions, and facial expressions. A similar process is used for audio, where hours of speech are collected to serve as the training foundation.
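Coverage of angles matters as much as raw volume, so a pipeline might check the extracted face set for gaps before training. The following is a minimal sketch. It assumes each extracted face already carries a yaw (left-right head rotation) estimate in degrees from some pose or landmark estimator. The function name, bin width, and minimum count are hypothetical choices for illustration.

```python
from collections import Counter

def underrepresented_yaw_bins(yaw_angles, bin_width=15, min_count=50,
                              yaw_range=(-90, 90)):
    """Return yaw bins, as (low, high) degree tuples, holding fewer than min_count faces.

    yaw_angles: per-face yaw estimates in degrees (assumed input, not computed here).
    """
    low, high = yaw_range
    counts = Counter()
    for yaw in yaw_angles:
        if low <= yaw < high:
            counts[int((yaw - low) // bin_width)] += 1
    gaps = []
    for i in range((high - low) // bin_width):
        if counts[i] < min_count:
            gaps.append((low + i * bin_width, low + (i + 1) * bin_width))
    return gaps

# Hypothetical dataset: many near-frontal faces, almost no profile views.
angles = [0] * 200 + [20] * 80 + [-70] * 3
gaps = underrepresented_yaw_bins(angles)
print(f"{len(gaps)} of 12 yaw bins need more footage:", gaps)
```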

Model Training with Machine Learning Frameworks:

The core of deepfake creation lies in the training phase, which leverages powerful open-source machine learning frameworks.

TensorFlow and PyTorch:

These platforms provide the fundamental libraries and tools required to build and train complex neural networks. They enable the implementation of encoder-decoder (autoencoder) architectures, which are central to classic face-swapping techniques. In the common design, a single shared encoder learns to compress faces from both the source and the target into a lower-dimensional latent space. This space is meant to capture pose, expression, and lighting rather than identity. Two separate decoders are then trained, one per person, to reconstruct each individual's face from that shared latent representation.

Training Process:

Each decoder is trained only on its own person's images, so it learns to render that specific identity. The swap happens at inference time. Frames of the target are passed through the shared encoder and then through the source's decoder, which reconstructs the source's face with the target's expressions and head orientation. Training requires significant computational power, often demanding high-end GPUs, and can take days or even weeks to achieve a convincing result.
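The routing that makes the swap work can be shown with toy stand-ins for the networks. In the widely used autoencoder design, one encoder is shared across both identities and each identity gets its own decoder. The swap feeds a target frame through the shared encoder and then through the source's decoder. The sketch below is not a neural network. The hypothetical `Face` and `Latent` records, `shared_encoder`, and `make_decoder` only mimic what the trained components are intended to do: the latent code carries pose and expression, and the decoder supplies identity.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Face:
    identity: str      # whose face this is
    yaw: float         # head orientation in degrees
    expression: str    # e.g. "smile", "neutral"

@dataclass(frozen=True)
class Latent:
    yaw: float
    expression: str

def shared_encoder(face: Face) -> Latent:
    # Toy stand-in: a trained shared encoder is intended to keep identity-agnostic
    # features, because both decoders must reconstruct from the same latent space.
    return Latent(yaw=face.yaw, expression=face.expression)

def make_decoder(identity: str):
    # Toy stand-in: each decoder is trained only on one person's faces,
    # so whatever latent it receives, it renders that person.
    def decode(code: Latent) -> Face:
        return Face(identity=identity, yaw=code.yaw, expression=code.expression)
    return decode

decoder_source = make_decoder("source")
decoder_target = make_decoder("target")

# Training objective per identity: decoder_X(shared_encoder(face_X)) ~= face_X.
# Swap at inference time: encode the target frame, decode with the SOURCE decoder.
target_frame = Face(identity="target", yaw=-20.0, expression="smile")
swapped = decoder_source(shared_encoder(target_frame))
print(swapped)
```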

Synthesis and Post-Processing:

Once the model is adequately trained, the synthesis phase begins. The model takes the target video footage and, frame-by-frame, replaces the target's face with the synthesized source face. The output is often imperfect, containing visual artifacts, poor blending, or unnatural-looking features. Consequently, post-processing is a critical final step, where creators may use traditional video editing software to refine color grading, smooth edges, and correct inconsistencies to enhance realism.
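Much of the blending work reduces to alpha compositing with a feathered mask. This lets the synthesized face fade into the original frame instead of ending at a hard edge. Below is a minimal pure-Python sketch on grayscale patches stored as nested lists. It assumes the synthesized face has already been aligned to the frame and that `frame` is the region of the original frame the face will cover. The function names and the linear feathering scheme are illustrative choices, not the method of any specific tool.

```python
def feathered_mask(height, width, feather):
    """Mask that is 1.0 in the interior and ramps linearly to 0.0 over `feather` pixels at the edges."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Distance in pixels to the nearest patch border.
            d = min(x, y, width - 1 - x, height - 1 - y)
            row.append(min(1.0, d / feather) if feather > 0 else 1.0)
        mask.append(row)
    return mask

def alpha_blend(frame, face, mask):
    """Per-pixel composite: out = mask * face + (1 - mask) * frame."""
    return [
        [m * f + (1.0 - m) * b for b, f, m in zip(frame_row, face_row, mask_row)]
        for frame_row, face_row, mask_row in zip(frame, face, mask)
    ]

# 5x5 patch: original region is dark (10), synthesized face is bright (200).
frame_patch = [[10.0] * 5 for _ in range(5)]
face_patch = [[200.0] * 5 for _ in range(5)]
out = alpha_blend(frame_patch, face_patch, feathered_mask(5, 5, feather=2))
for row in out:
    print(row)
```

Border pixels keep the original frame, the center takes the synthesized face, and pixels in between mix the two. Imperfect masks are one source of the edge artifacts that detectors look for.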

From Complex Code to Accessible Tools

While the underlying process is complex and resource-intensive, the barrier to entry has been significantly lowered by specialized software. These applications abstract away much of the coding and manual configuration, packaging the deepfake generation workflow into more manageable interfaces. The availability of user-friendly software has been a primary driver of the rapid increase in deepfake content, enabling individuals without a deep background in machine learning to create synthetic media. The next section explores some of the most prominent tools that facilitate this process.

Tools and Software

The proliferation of deepfake technology has been accelerated by the availability of user-friendly tools. While some deepfakes are created using custom code and complex frameworks like TensorFlow and PyTorch, several open-source and commercial applications have lowered the barrier to entry. Popular tools include the open-source desktop projects DeepFaceLab and FaceSwap, as well as various mobile applications that offer similar functionality.

Disclaimer: These tools can be used for legitimate and creative purposes, such as in film production or satire. However, they are also the primary instruments used to create malicious deepfakes for fraud, disinformation, and harassment. A thorough understanding of these tools from a defensive perspective is essential for IT security professionals tasked with protecting their organizations from synthetic media threats.

Different Types of Deepfakes

Deepfake technology is not a monolithic entity; it encompasses a range of techniques used to create various forms of synthetic media. For IT security professionals, understanding these different types is crucial for assessing threat vectors and implementing appropriate countermeasures. Each category presents unique risks and requires distinct detection methodologies.

Video-Based Deepfakes

1. Face Swapping (Identity Replacement): This is the most widely recognized form of deepfake. It involves replacing the face of a person in a video with the face of another. The technology maps the facial movements and expressions of the target individual onto the source face, creating a seamless but fabricated video.

Threat Vector: Can be used to create fraudulent videos of executives making false statements, appearing to approve actions they never sanctioned, or engaging in reputation-damaging behavior.

2. Lip-Syncing (Puppeteering): In lip-sync deepfakes, an audio track is used to drive the mouth and facial movements of a person in a video, making them appear to say something they did not. The underlying footage is genuine, but the mouth region is re-rendered to match fabricated speech.

Threat Vector: Can be weaponized to create fake CEO announcements, spread disinformation, or generate false evidence in legal disputes.

3. Facial Reenactment (Expression Swapping): This technique transfers the facial expressions and head movements of one person onto another. It allows a puppeteer to control the facial expressions of a target in real time, creating a "digital puppet."

Threat Vector: Can be used in real-time social engineering attacks, such as live video calls in which the attacker's camera feed is manipulated to impersonate a trusted individual.

Audio-Based Deepfakes

Voice Cloning (Voice Synthesis): This technology uses recordings of a person's voice to generate new, synthetic speech that mimics their unique vocal patterns, pitch, and cadence. Traditional approaches train on hours of speech, but some recent systems can produce a convincing clone from only a few seconds of audio.

Threat Vector: A primary tool for sophisticated vishing attacks, where a cloned voice is used to authorize fraudulent financial transfers, reset passwords, or extract sensitive information from employees.

Deepfake Detection Tools

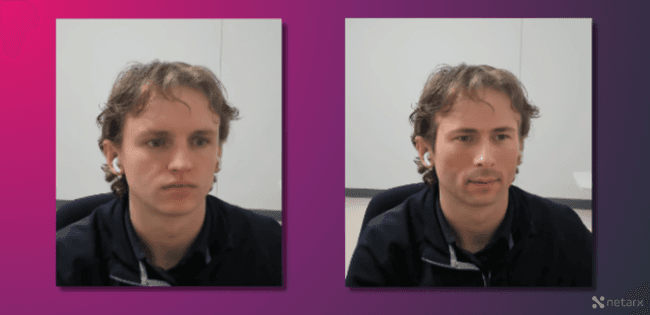

The rapid advancement of synthetic media has created a parallel need for robust detection technologies. As deepfakes become more sophisticated, the ability to distinguish between authentic and manipulated content is critical for mitigating security risks. In response, a new class of specialized tools has emerged, designed to identify the subtle flaws inherent in AI-generated media. Platforms like Netarx are at the forefront of this effort, providing a necessary layer of defense against deceptive content.

These detection systems operate by analyzing digital media for artifacts and inconsistencies that are often imperceptible to the human eye. The core methodologies include:

Inconsistency Analysis:

Detection algorithms scrutinize video frames for inconsistencies in lighting, shadows, reflections, and pixel patterns. For example, a synthesized face may not perfectly match the lighting environment of the original footage, creating subtle discrepancies that a machine can flag.
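One simple inconsistency cue compares the brightness of the face region with the area immediately around it, since a pasted face rendered under different lighting may stand out. The following is a deliberately naive heuristic, sketched in pure Python on a grayscale image stored as nested lists. It assumes the face bounding box comes from a separate face detector. The `margin` and `max_ratio` values are hypothetical, and production detectors typically rely on learned models rather than a single hand-set threshold.

```python
def _mean(values):
    return sum(values) / len(values) if values else 0.0

def lighting_mismatch(image, box, margin=4, max_ratio=1.5):
    """Flag a face box whose mean brightness differs sharply from a ring around it.

    image: 2D list of grayscale values (0-255).
    box: (top, left, bottom, right) of the face, bottom/right exclusive (assumed input).
    Returns (ratio, flagged), where ratio = brighter mean / darker mean.
    """
    top, left, bottom, right = box
    h, w = len(image), len(image[0])
    inside, ring = [], []
    for y in range(max(0, top - margin), min(h, bottom + margin)):
        for x in range(max(0, left - margin), min(w, right + margin)):
            if top <= y < bottom and left <= x < right:
                inside.append(image[y][x])
            else:
                ring.append(image[y][x])
    a, b = _mean(inside), _mean(ring)
    ratio = max(a, b) / max(min(a, b), 1e-6)
    return ratio, ratio > max_ratio

# Hypothetical 12x12 frame: dim scene (80) with a much brighter pasted face (200).
frame = [[80.0] * 12 for _ in range(12)]
for y in range(4, 8):
    for x in range(4, 8):
        frame[y][x] = 200.0
print(lighting_mismatch(frame, box=(4, 4, 8, 8)))
```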

Behavioral and Physiological Anomaly Detection:

AI models are trained to recognize unnatural biological signals. This includes analyzing blinking patterns, which are often irregular or absent in early deepfakes, as well as facial movements, head pose, and eye gaze that deviate from normal human behavior.
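Blink analysis is often built on the eye aspect ratio (EAR), computed from six landmarks around each eye. The ratio drops sharply when the eye closes. The sketch below assumes the landmarks come from an external face-landmark tracker, ordered p1 to p6 with p1 and p4 at the eye corners. It counts blinks as runs of low-EAR frames and compares the resulting rate with a plausible range. The 0.2 threshold, the two-frame minimum, and the 5 to 40 blinks-per-minute range are hypothetical values for illustration, not calibrated settings.

```python
import math

def eye_aspect_ratio(eye):
    """EAR from six (x, y) eye landmarks p1..p6, where p1 and p4 are the eye corners.

    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it falls toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    return (math.dist(p2, p6) + math.dist(p3, p5)) / (2.0 * math.dist(p1, p4))

def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:
        blinks += 1
    return blinks

def blink_rate_suspicious(ear_series, fps, low=5.0, high=40.0):
    """Return (blinks_per_minute, suspicious) using a hypothetical plausible range."""
    minutes = len(ear_series) / fps / 60.0
    rate = count_blinks(ear_series) / minutes
    return rate, not (low <= rate <= high)

open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print("EAR (open eye):", eye_aspect_ratio(open_eye))

# One minute at 30 fps: a 3-frame blink every 4 seconds versus no blinks at all.
natural = ([0.1] * 3 + [0.3] * 117) * 15
never_blinks = [0.3] * 1800
print(blink_rate_suspicious(natural, fps=30))
print(blink_rate_suspicious(never_blinks, fps=30))
```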

Audio-Visual Synchronization Analysis:

For video deepfakes that include manipulated audio, these tools assess the synchronization between lip movements and the spoken words. Even minor asynchronies can indicate that the audio or video has been altered. Some tools also analyze the acoustic properties of the recording environment to check that the voice is consistent with the room in which it was supposedly recorded.
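A basic way to measure lip-audio alignment is to cross-correlate a mouth-opening signal with the audio energy envelope and find the offset where they match best. The following is a minimal pure-Python sketch. It assumes both signals have already been extracted and resampled to the video frame rate: mouth opening from a landmark tracker and energy from the soundtrack. The function names and the `max_lag` search window are hypothetical.

```python
def normalize(signal):
    """Zero-mean, unit-norm copy of a signal (returned centered only if it is constant)."""
    m = sum(signal) / len(signal)
    centered = [s - m for s in signal]
    norm = sum(c * c for c in centered) ** 0.5
    return [c / norm for c in centered] if norm > 0 else centered

def best_lag(mouth_opening, audio_energy, max_lag=10):
    """Return (lag, score) for the frame offset that best aligns audio with mouth motion.

    A positive lag means the audio trails the video by `lag` frames.
    """
    a, b = normalize(mouth_opening), normalize(audio_energy)
    best = (0, float("-inf"))
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(len(a)):
            j = i + lag
            if 0 <= j < len(b):
                score += a[i] * b[j]
        if score > best[1]:
            best = (lag, score)
    return best

# Hypothetical per-frame signals: the audio is the mouth signal delayed by 3 frames.
mouth = [0, 0, 1, 3, 1, 0, 0, 0, 2, 4, 2, 0, 0, 0, 0, 1, 0, 0, 0, 0]
audio = [0, 0, 0] + mouth[:-3]
print(best_lag(mouth, audio))
```

A consistent offset of more than a frame or two could then be flagged for review; that tolerance would be a judgment call. This check only catches a global offset, not the localized mismatches a lip-sync deepfake may produce, which is why dedicated tools tend to use learned audio-visual models.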

The development and deployment of these detection tools are essential in the ongoing fight against the malicious use of deepfake technology. They provide organizations with the capability to verify the authenticity of digital communications and protect against attacks like social engineering, disinformation campaigns, and fraud.

Securing Trust in the Age of Deepfakes

The evolution of deepfake technology presents a significant dual-use dilemma. On one hand, it offers remarkable potential for creative expression in film, art, and entertainment. On the other, it introduces formidable threats to cybersecurity, personal reputation, and information integrity. The accessibility of sophisticated creation tools means that IT security professionals must now contend with a landscape where seeing—or hearing—is no longer believing.

The journey from complex machine learning frameworks to user-friendly applications has democratized the ability to create highly realistic synthetic media. However, this same technological progression has spurred the development of critical countermeasures. The emergence of advanced detection platforms, including solutions like Netarx, signifies a crucial response from the security community. By leveraging AI to fight AI, these tools provide an essential mechanism for verifying digital content and safeguarding against manipulation. Ultimately, maintaining trust in a digital world requires a proactive and adaptive security posture, one that acknowledges the risks of synthetic media while embracing the innovation required to counter them.